pg_dumpall writes out ("dumps") all PostgreSQL databases of a cluster into one script file. The script file contains SQL commands that can be used as input to psql to restore the databases. It does this by calling pg_dump for each database in the cluster.

To back up all databases, you can run pg_dump for each database sequentially, or in parallel if you want to speed up the backup process.

What are pg_dump and pg_dumpall? When used properly, pg_dump creates a portable and highly customizable backup file that can be used to restore all or part of a single database. Because pg_dump only backs up one database at a time, it does not store information about database roles or other cluster-wide configuration.
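Running pg_dump once per database, sequentially or in parallel, could be sketched as follows. This is a minimal sketch, not a definitive script: the function names, the `.dump` file suffix, and the choice of the custom archive format are assumptions made for illustration, and the PostgreSQL client tools must be on the `PATH` for the commands to actually run.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def dump_command(database, out_dir):
    """Build the pg_dump argv for one database (custom-format archive)."""
    target = Path(out_dir) / f"{database}.dump"
    return ["pg_dump", "--format=custom", f"--file={target}", database]


def dump_databases(databases, out_dir, workers=1, run=subprocess.run):
    """Run one pg_dump per database; workers > 1 runs several at the same time.

    `run` is injectable (hypothetical hook) so the plan can be checked
    without a PostgreSQL server.
    """
    commands = [dump_command(db, out_dir) for db in databases]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every dump and re-raises the first failure, if any.
        list(pool.map(lambda cmd: run(cmd, check=True), commands))
    return commands
```

With `workers=1` the dumps run one after another. A higher value trades extra load on the server for a shorter backup window.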

To store this information and back up all of your databases in one run, you can use pg_dumpall. The cluster-wide data it saves currently includes information about database users and groups. A plain-text dump contains the CREATE TABLE, ALTER TABLE, and COPY SQL statements of the source database, so the psql command is enough to restore it.
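As a rough illustration of that dump-and-restore round trip, the sketch below builds the two commands involved. The helper names and the file name `cluster.sql` are hypothetical. Connecting to the `postgres` database for the restore is an assumption that works on a default installation, because psql only needs some existing database to connect to.

```python
import shlex


def dumpall_command(script_path):
    """Build the argv that dumps every database in the cluster to one SQL script."""
    return ["pg_dumpall", f"--file={script_path}"]


def restore_command(script_path, database="postgres"):
    """Build the argv that replays the script through psql."""
    return ["psql", f"--file={script_path}", database]


# Print the shell form of both commands; to execute them, pass the lists
# to subprocess.run(command, check=True) instead.
print(shlex.join(dumpall_command("cluster.sql")))
print(shlex.join(restore_command("cluster.sql")))
```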

Most Postgres servers have three databases defined by default: template0, template1, and postgres. You will also learn how to restore a database backup. pg_dump, pg_dumpall, and psql accept connection options for reaching a remote server or one that requires authentication, and these options work with all the commands given in this article.

The pg_dump command which worked before, in 7. Is this by design, or by accident? There is no package available to get just these files.
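The connection options can be sketched as a small helper that adds `--host`, `--port`, and `--username` to any of the commands. The helper names, host name, and user name below are made up for illustration. For the password, the client tools can read the `PGPASSWORD` environment variable or a `~/.pgpass` file, which avoids typing it at a prompt.

```python
def connection_args(host=None, port=None, user=None):
    """Translate connection settings into the options shared by pg_dump, pg_dumpall and psql."""
    args = []
    if host:
        args.append(f"--host={host}")
    if port:
        args.append(f"--port={port}")
    if user:
        args.append(f"--username={user}")
    return args


def with_connection(command, **conn):
    """Return a copy of `command` with the connection options placed after the program name."""
    return [command[0], *connection_args(**conn), *command[1:]]


# Hypothetical remote server and backup role.
remote_dump = with_connection(
    ["pg_dump", "--file=app.sql", "app"],
    host="db.example.com", port=5432, user="backup",
)
print(remote_dump)
```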

For offloading the data we are using the pg_dump utility: pg_dump -t schema. As I understand it, this dumps all the data in the table up to today to the path mentioned. We have been asked to schedule weekly or monthly incremental data loads to the new server.

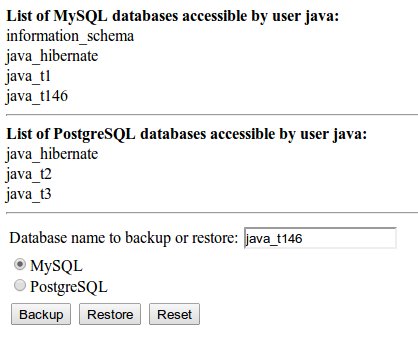

Here are some scripts which will back up all databases in a cluster individually, optionally backing up only the schema for a set list. The reason one might wish to use this over pg_dumpall is that you may only wish to restore individual databases from a backup, whereas pg_dumpall dumps a plain SQL copy into a single file.

Should I run this statement for each and every table, or is there a way to run a similar statement to export all selected tables into one big SQL file?
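On the question of exporting several tables at once: pg_dump accepts the `--table` switch more than once, so a single run can write all selected tables into one file. The sketch below builds such a command. The function name and the table names are hypothetical, and the optional `--schema-only` flag covers the case of dumping definitions without data.

```python
def tables_dump_command(database, tables, out_file, schema_only=False):
    """Build one pg_dump argv that exports every listed table into a single file."""
    command = ["pg_dump", f"--file={out_file}"]
    if schema_only:
        # Dump only the object definitions, not the rows.
        command.append("--schema-only")
    for table in tables:
        # One --table switch per table; pg_dump combines them.
        command.append(f"--table={table}")
    command.append(database)
    return command


# Hypothetical schema-qualified table names.
print(tables_dump_command("app", ["public.orders", "public.customers"], "selected.sql"))
```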

Any help will be appreciated. I used it to write my own, as shown below. To do: back up the full schema without data.

Dumping Using pg_dump and pg_dumpall

The pg_dump utility can be used to generate a logical dump of a single database.

If you need to include global objects (like users and tablespaces) or dump multiple databases, use pg_dumpall instead. The output generated by pg_dump is not a traditional “backup”: it omits some information. If you want one file per database, you have to use pg_dump for each and every database you want to dump. Don't forget to use pg_dumpall with the -g option to save roles and tablespaces.
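Put together, that approach is one globals-only pg_dumpall run followed by one pg_dump per database. Here is a minimal sketch of such a plan, assuming plain SQL output. The file layout and the function names are illustrative only.

```python
def globals_command(out_file):
    """Build the pg_dumpall argv that saves only roles and tablespaces (-g)."""
    return ["pg_dumpall", "--globals-only", f"--file={out_file}"]


def backup_plan(databases, out_dir):
    """Return the commands to run in order: globals first, then each database."""
    plan = [globals_command(f"{out_dir}/globals.sql")]
    for database in databases:
        plan.append(["pg_dump", f"--file={out_dir}/{database}.sql", database])
    return plan


for command in backup_plan(["app", "crm"], "backups"):
    print(command)
```

On restore, the globals file would normally be loaded first, so that the roles owning the dumped objects already exist.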

You can list all your databases with psql. To save time, if you would like to back up all of the databases in your system, there is a utility called pg_dumpall. The syntax of the command is very similar to the regular pg_dump command, but it does not specify the database. This gets you a logical backup of all databases of your PostgreSQL server.
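Listing the databases with psql can be sketched like this: the query reads the `pg_database` catalog and skips the templates, and the psql options strip headers and alignment so that the output is one name per line. The function names and the choice of `postgres` as the database to connect to are assumptions.

```python
LIST_DATABASES_SQL = (
    "SELECT datname FROM pg_database WHERE NOT datistemplate ORDER BY datname"
)


def list_databases_command(maintenance_db="postgres"):
    """Build a psql argv that prints one database name per line, without decoration."""
    return [
        "psql",
        "--no-align",
        "--tuples-only",
        f"--command={LIST_DATABASES_SQL}",
        maintenance_db,
    ]


def parse_database_names(psql_output):
    """Turn the psql output text into a list of names, ignoring blank lines."""
    return [line.strip() for line in psql_output.splitlines() if line.strip()]


# Example with captured output; in practice the text would come from
# subprocess.run(list_databases_command(), capture_output=True, text=True).stdout
print(parse_database_names("app\ncrm\npostgres\n"))
```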

For each one of these we then assign its name and the date to a filename and, after we execute vacuumdb, we use pg_dump with gzip to actually create the backup and write it to the file. The other two lines (size and kb_size) are used to calculate the size of the.
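The original script is not shown here, so the following is only a sketch of the steps described: build a dated filename, run vacuumdb, then compress the pg_dump output with gzip and report a size. All function names are hypothetical. Python's `gzip` module is swapped in for the `gzip` command, and measuring the compressed file is an assumption about what `size` and `kb_size` refer to.

```python
import datetime
import gzip
import shutil
import subprocess
from pathlib import Path


def backup_path(out_dir, database, day):
    """Combine the database name and the date into the backup filename."""
    return Path(out_dir) / f"{database}_{day:%Y-%m-%d}.sql.gz"


def compress_stream(stream, target):
    """Copy a binary stream into a gzip file and return the file size in bytes."""
    with gzip.open(target, "wb") as out:
        shutil.copyfileobj(stream, out)
    return Path(target).stat().st_size


def backup_database(database, out_dir, day=None):
    """Vacuum one database, dump it through gzip, and report the result size."""
    day = day or datetime.date.today()
    target = backup_path(out_dir, database, day)
    subprocess.run(["vacuumdb", "--analyze", database], check=True)
    with subprocess.Popen(["pg_dump", database], stdout=subprocess.PIPE) as proc:
        size = compress_stream(proc.stdout, target)
    if proc.returncode != 0:
        raise RuntimeError(f"pg_dump failed for {database}")
    kb_size = size / 1024  # assumed meaning of kb_size: compressed size in KB
    return target, size, kb_size
```

Calling `backup_database("app", "/var/backups")` would need a reachable server and write access to the directory; both names are placeholders.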
